Facebook the Blind Kingmaker: how and why Big Tech "helps" you choose your president

We've long known that social networks can influence our choice of a car, a movie to watch, or a trip to plan. There are ads that tell us what to buy, there are recommendations and suggestions, and there is the prioritization of posts in news feeds, all of which imperceptibly dictate our behavior.

But can algorithms make us vote for one presidential candidate over another? Yes, they actually can, as we will illustrate below.

And the worst part is that nobody knows exactly how. Or why, or to what end, or what will happen next. Bad news for lovers of conspiracy theories: there is most likely no World Government, just a bunch of algorithms that even their own developers don't yet understand well enough to be sure how they work.

What should we users do about all this? How exactly does social media affect our choices, both in private and in public life?

Of course, Facebook is not the only platform with this kind of influence, but we focus on it here for obvious reasons: it is the world's largest social network, with the most diverse user base worldwide; the most criticized social network in the world (although some would argue that Chinese social networks can compete); and the most scrutinized. But before we tackle Facebook, let's browse some Twitter.

Mark Zuckerberg, look what you've done

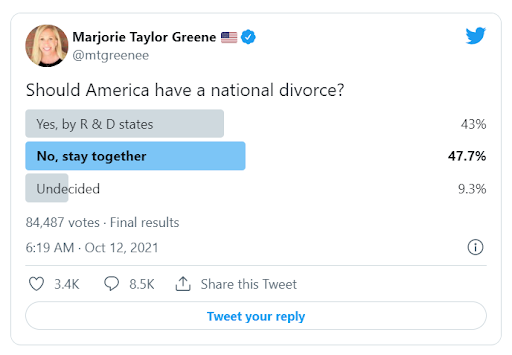

Facebook is being blamed for no less than a "national divorce": a fatal split of the US into uneven halves. According to the results of a recent Twitter poll posted by Rep. Marjorie Taylor Greene, this could actually happen.

43% of respondents wanted the country divided into Republican and Democratic states. Only slightly more, 47%, voted to "Stay together".

Greene noted that Twitter was a "very hard-left" platform and was therefore biased. She said that the poll results were initially mostly in favor of "yes", but within days "the left attacked it" and worked to drive the numbers down.

We wonder whether the political views of Twitter's founders and executives have anything to do with that.

In fact, there is plenty of scientific research concluding that Facebook drives political polarization among citizens, and not only in the US.

By the way, former US President Donald Trump is making a third attempt to launch his own social network. The BBC reported some time ago that a Twitter-like service called TRUTH Social would "stand up to the tyranny of big tech". There will be "truths" instead of tweets, and they can be "retruthed".

Of course, Donald Trump wants to harness the potential of social media: the tools they give a single person to address the whole world, urbi et orbi. The problem is that, starting with Barack Obama's presidential campaign, the major social media platforms began to see themselves not as tools but as masters.

Mr Obama was the first US president to fully harness the power of social media for his campaign. But back then it was a one-way street. Trump's campaign became the turning point: both society and "Big Tech" itself recognized "Big Tech" as a political actor.

There is a small problem here: a would-be president must at least pretend to act in the interests of the country and its people, and is accountable to them. "Big Tech", however, acts only in the interests of its beneficiaries and shareholders.

Facebook the politician: involuntary, unpredictable, uncontrollable

We doubt that Mark Zuckerberg originally wanted his company to be a political actor. He would probably have preferred to keep Facebook from becoming a platform for political activity, campaigning, and propaganda, but that was hardly possible. A company of Facebook's scale and impact can't stay out of politics. And it doesn't: Facebook spent more on lobbying than any other Big Tech company in 2020. By contrast, Google cut its lobbying spending by 36.2% in 2020 compared with 2019.

The problem is that Facebook is now a giant black box stuffed with AI algorithms. Data goes in, gets processed, and the results are often unexpected and inexplicable even to the system's own creators. An army of moderators struggles to correct algorithmic mistakes by hand but keeps losing the battle.

And it's not just Facebook. And not just the USA.

In October 2021, Twitter's developers had good reason to scratch their heads and think about how their own version of the AI black box actually works. As mentioned above, Twitter is considered to be "left". But… the company's "in-depth analysis of whether our recommendation algorithms amplify political content" showed that the algorithms do, and in an unexpected direction. Twitter's creators don't know why.

In six of the seven countries studied (Canada, France, Japan, Spain, the United Kingdom, and the United States; all but Germany), "Tweets posted by accounts from the political right receive more algorithmic amplification than the political left when studied as a group."

Likewise, right-leaning news outlets, as classified by independent media-rating organizations, see greater algorithmic amplification on Twitter than left-leaning news outlets.

How do social platforms actually influence elections?

There are three things they do:

- Allow politicians and the media to use the platform's services to communicate with people: officially, by supporting features like public pages and groups; and unofficially, for example, by letting user data end up with Cambridge Analytica and the like.

- Probably pursue their own agenda. We don't know that for sure. But they have motives, and they certainly have the means.

- Try to understand and control AI algorithms that may develop an agenda of their own.

Facebook is not transparent. Facebook is not united internally. Facebook recently started restricting employees' access to internal documents and tools they don't need for their immediate duties. And Facebook keeps telling us that all it cares about is people's happiness and the bright future of the world.

We are already a little tired of it. A company with a perfect founder who once vowed to eat only animals he had killed with his own hands. With a perfect life, a perfect wife, wonderful kids, and a funny dog. Donating left and right, always feeling excited and amazed… The same founder who lied to Congress and refused to share data with researchers. Should we trust his robots?

Democratic-leaning media keep accusing Facebook of supporting Republicans, and vice versa. Whom does it actually support, and what part do users play in this spectacle?

Big social networks already control how we perceive the world and how we act in it. And now even their own developers no longer understand how. But maybe it is still not too late to stop the process and take control of it?

But the opacity of corporations and their algorithms is not the only side of the issue. Robots make mistakes. They attach COVID labels to posts that have nothing to do with COVID, irritating people and causing label blindness. They flag news as fake and thereby draw more attention to it.

They fight nipples on Facebook and Instagram, even those painted by Renaissance masters and displayed in the world's best museums. They ban posts about onions because their robots find them too sexy. Everybody is prone to mistakes, especially robots, but it seems weird that a company now building a metaverse can't tell an onion from a boob.

It will only get worse.

The Brookings Institution, a Washington, DC think tank that studies social issues, states:

Our central conclusion, based on a review of more than 50 social science studies and interviews with more than 40 academics, policy experts, activists, and current and former industry people, is that platforms like Facebook, YouTube, and Twitter likely are not the root causes of political polarization, but they do exacerbate it.

Clarifying this point is important for two reasons. First, Facebook’s disavowals, in congressional testimony and other public statements, may have clouded the issue in the minds of lawmakers and the public. Second, as the country <...> turns its attention to elections in 2022, 2024, and beyond, understanding the harmful role popular tech platforms can play in U.S. politics should be an urgent priority.

So what can we do?

Explore the list of cognitive biases. Take your time. Make a habit of checking whether you are thinking rationally or being manipulated.

Remember that a social network is not a playground in your backyard; it's an active and selfish playmate that is always part of your communication and self-expression.

Turn off ads. They are the most straightforward tool for manipulating you. Thanks for the honesty, but we'll pass: manipulation works even when you recognize it.

Switch your feeds to chronological order. Zuckerberg will keep switching you back from Most Recent to Top Posts and moving the Most Recent button around the interface, but don't give up.

Reduce your use of social media. Yes, there is plenty of research on its positive effects on relationships and on staying connected with friends and family, but there is also plenty of research on its harmful impact. And Facebook and its ilk have not existed long enough for solid science to properly understand their numerous and subtle effects on people. Try some form of digital detox: people who have done so often find it beneficial. And the more anxious the situation (say, a presidential election), the more you gain from staying away from drivers of anxiety powered by robots built to make money.

Perhaps you should listen to Mark Zuckerberg! Let's imagine that he's sincere and that we really should be using Facebook to stay in touch with our relatives and friends and to share precious life moments, like walking your mini pig in a red-and-gold autumn park. After all, maybe getting political and discussing political news on Facebook really is bad for your mental health?