Reddit, AI’s lookalikes, US govt vs Apple and fake ads galore. AdGuard’s digest

In this edition of AdGuard’s digest: Americans want control of their data back, Reddit suffers a hack, researchers show that AI image generators can pose a privacy threat, the US government wants Apple to open up, and Google search ad bandits target Amazon Web Services customers.

Americans do not want to ‘pay’ for services with their data — survey

The majority of Americans want to control what marketers can learn about them, but don’t believe they can, and have little idea what tools they can use to protect their data. According to a recent report by the Annenberg School for Communication at the University of Pennsylvania, 80% of Americans believe it is “naive” to think they can reliably protect their personal information from being collected by marketers.

At the same time, most Americans reject the status quo of receiving ‘free services’ or something else of value in exchange for their personal information. 88% disagree that companies should be able to freely collect information about them without their knowledge in exchange for a discount. 61% don’t think it’s right for shops to build detailed profiles of them on the pretext of improving their service. And 68% believe that stores should not use in-store WiFi to monitor their online behavior as they wander the aisles. The researchers also found that many respondents do not have a clear understanding of how tracking works. For example, 45% wrongly believe that a smart TV vendor cannot help advertisers send ads to users’ smartphones based on their TV viewing history.

The findings suggest that Americans are increasingly concerned about their data being collected without their explicit permission, but are confused about how far advertisers’ tentacles can reach. They also suggest that people no longer accept that they have to pay with their data to use a “free service.” This shift in attitude is encouraging and will hopefully lead to a change in the way advertisers, and especially Big Tech, treat users. We have long argued that, contrary to popular and misguided belief, our personal data is not worthless. In fact, it’s a hot commodity on the data market, generating huge profits for big companies. And it’s only fair to take back control of it.

Hackers leave Reddit red-faced after source code stolen in a phishing attack

Reddit, the fabled front page of the Internet with over 50 million users, has had its source code and internal documents stolen in a phishing attack. The attack took place on February 5, when hackers targeted Reddit employees with “plausible-sounding prompts” to lure them to a clone of Reddit’s intranet, the company’s private internal network. Reddit’s CTO Christopher Slowe revealed that one employee fell for the ruse, which allowed the hackers to steal their credentials and gain access to the company’s code, some internal documents, and some internal dashboards and business tools. Slowe also revealed that “limited contact information” of hundreds of Reddit’s employees and contacts was exposed. However, Reddit assured users that their passwords and accounts appear to be “safe” so far.

Still, Slowe urged users to enable two-factor authentication for better account protection, change their passwords every few months, and use password managers. These are some of the basic rules of digital hygiene that everyone should follow; see our digital hygiene cheat sheet for more. On a broader scale, it’s unfortunate that yet another company has been hacked and that humans have once again proven to be the weakest link in the system. The best way to prevent such incidents is to raise employees’ awareness of these types of attacks, teach them to recognize potential red flags, and educate them about cybersecurity rules.

AI image generators can ‘reproduce’ training images, but there’s a catch

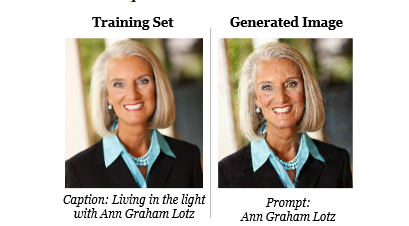

Our digest would not be complete without the buzzword of the moment: AI and its models. A motley crew of researchers from Google, DeepMind, UC Berkeley, Princeton and ETH Zurich have found that AI-powered text-to-image generators such as Stable Diffusion and Google Imagen can memorize images from their training data. Put simply, these larger-than-life models, which are trained on huge amounts of publicly available data, can sometimes produce an image that is almost identical to one they once scraped.

Source: Extracting Training Data from Diffusion Models, Carlini et al.

Given that the people whose images serve as training material for these models never gave permission for their images to be used this way, this is a serious privacy risk. Imagine that your photo (well, not yours, but one that looks identical to it to the naked eye) is being used to promote some shady product, and you can’t do anything about it, not even sue.

The catch is that the chances of your image being reproduced by AI are currently quite low. The researchers tested 350,000 of the most duplicated images in the Stable Diffusion training data and found only 94 direct matches and 109 near matches; the direct matches alone come to about 0.03 per cent. The figure for Google Imagen was higher, at 2.3 per cent. However, researcher Eric Wallace noted that while the numbers may seem small, future (and larger) diffusion models “will remember more” and thus pose a greater privacy risk. The privacy threat from AI is real, and we have warned about it before. At the moment, there’s little we can do to protect ourselves from it, other than to share less of our personal data online.
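As a quick sanity check on these figures, the short Python sketch below computes the match rates from the Stable Diffusion numbers reported above. It assumes that the percentage is taken over the 350,000 images tested; the variable and function names are purely illustrative.

```python
# Stable Diffusion figures as reported in the digest (assumed denominator: images tested).
tested = 350_000
direct_matches = 94
near_matches = 109


def rate_percent(count: int, total: int) -> float:
    """Return count as a percentage of total."""
    return 100 * count / total


direct_rate = rate_percent(direct_matches, tested)
combined_rate = rate_percent(direct_matches + near_matches, tested)

print(f"direct only:   {direct_rate:.3f}%")    # 0.027%
print(f"direct + near: {combined_rate:.3f}%")  # 0.058%
```

On that assumption, the direct matches alone work out to roughly 0.03 per cent, while counting near matches as well brings the rate to just under 0.06 per cent, which is still tiny.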

US govt takes on Big Tech, wants Apple to allow sideloading apps

Following in the EU’s footsteps, the US government has urged lawmakers to pass legislation that would curb the dominance of Apple and Google in the app store market. The US Department of Commerce’s National Telecommunications and Information Administration (NTIA) issued a report arguing that the so-called gatekeepers “created unnecessary barriers and costs for app developers, ranging from fees for access to functional restrictions that favor some apps over others.” Both Google and Apple charge up to 30% commission on in-app purchases and require apps to undergo review processes. The NTIA described the latter as “slow and opaque”.

The report also took aim at Google and Apple’s argument that any restrictions they impose on developers and users are aimed at providing better security. “In some areas, such as in-app payments, it is unclear how the current system benefits anyone other than Apple and Google,” the NTIA said. Ultimately, it is consumers who suffer from “inflated” prices, lack of app choice and lack of innovation, the officials concluded. The NTIA recommends that the US Congress pass legislation that would require gatekeepers to allow users to sideload applications (currently impossible on the iPhone) and use alternative payment methods.

It’s about time someone other than the EU challenged the hegemony of Google and Apple in the app store market. Pressure from regulators is already forcing Apple to open up its closed ecosystem in the EU, and we would like to see the same happen everywhere.

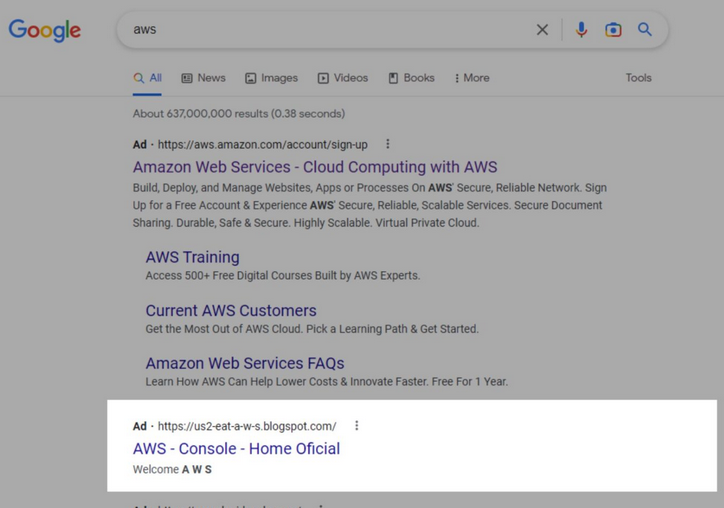

Cybercriminals use ‘fake’ Google ad to steal Amazon Web Services credentials

Both we and the FBI have previously warned about the dangers of Google search ads placed by impersonators of well-known companies. And unfortunately, despite Google’s assurances that it is cracking down on malicious ads, the threat is not going away. This time, cybercriminals targeted customers of Amazon Web Services, Amazon’s subsidiary with more than 1 million active users, many of them small and medium-sized businesses. According to an investigation by cybersecurity firm SentinelOne, malicious actors created a phishing website that looked like the AWS login page and placed an ad in Google Search that appeared just below AWS’s own legitimate ad.

Image Source: SentinelOne

The ‘poisoned’ ad initially led straight to the phishing domain, but the attackers later replaced that link with a proxy page that copied a legitimate confectionery blog. When a user clicked on the ad, this page loaded a second domain, which redirected them to the fake login page. This was presumably done to avoid detection by Google’s anti-fraud mechanisms. To further confuse victims, the legitimate AWS login page was displayed once they had entered their credentials into the spoofed form. The researchers note that such attacks using Google search ads are easy to launch and therefore pose “a serious threat” to ordinary users, as well as to network and cloud administrators. We wholly subscribe to this assessment.

One solution to this problem is to be aware of this type of malicious ad in the first place and to use an ad blocker, such as the AdGuard browser extension or the AdGuard app. If you’re using the AdGuard extension, you’ll need to enable the “block search ads” setting to hide search ads. For AdGuard apps, make sure the “Do not block search ads and self-promotion” checkbox is unchecked.