AdGuard’s digest: Meta snaps at Apple, US privacy is controlled by lobbyists, and new data breaches

In this edition of AdGuard’s digest: Meta is up in arms over Apple’s attempt to spin the EU antitrust law to its own advantage, lobbyists are to blame for weak privacy laws in the US, Uber is to pay for a privacy breach, and AI-powered street surveillance lands an entire city in hot water.

No, thanks: Meta shelves app store plans after Apple’s ‘farcical’ EU rules

Mark Zuckerberg has put a stop to Meta’s plans to enter the app store market after Apple revealed how it intends to comply with the EU’s antitrust law, the Digital Markets Act (DMA). In an earnings call last week, Zuckerberg said he would be surprised if any developer accepted Apple’s new terms of service in the EU, which include a new “core technology fee.”

We wrote an article explaining why the Core Technology Fee, or CTF, is so punitive, especially for developers of free and freemium apps that have reached 1 million new installs in the past 12 months. In a nutshell, under the new terms, the devs of free apps would have to pay tens of thousands of dollars in fees to Apple simply for accepting the terms. Accepting them would give developers the ability to list their apps in alternative app stores and use alternative payment methods, but the fee applies even if an app does not actually use any of these options. Zuckerberg called Apple’s master plan “onerous” and said it was “at odds with the intent of the EU regulation,” which was to open the door to more competition. “I think it’s just going to be very difficult for anyone, including ourselves, to really seriously entertain what they’re doing there,” he said.

Apple’s DMA compliance plan has drawn backlash from a number of developers, including Spotify, which called it a “total farce,” Epic Games, and Mozilla. We also think that Apple has given users a false choice instead of a meaningful one, and we hope EU regulators step in to force Apple to comply with the spirit of the antitrust law and not just its letter.

Lobbyists are shaping state privacy laws in the US, report claims

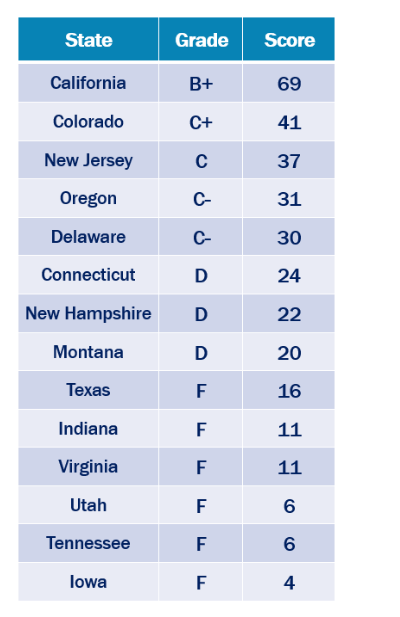

A new report from the Electronic Privacy Information Center (EPIC) and U.S. PIRG Education Fund has found that of the 14 states that have passed comprehensive privacy laws, 13 “closely follow a model drafted by industry giants.” The result of this close collaboration between tech giants and lawmakers is that most of these laws are weak, the researchers say. The report grades privacy laws from A to F, and while California emerged at the top of the class, it still had to content itself with a B+ score.

The report says that while there have been efforts to strengthen privacy protections across the US, they have faced resistance from lobbyists who have been successful “nearly everywhere.” The researchers argue that the laws currently in place protect privacy in name only. A real step toward more privacy would be to prohibit big tech companies from hoarding data by limiting the amount of personal information they can collect. Laws to do just that are currently being debated in Illinois, Massachusetts, Maine, and Maryland, but they are not a done deal, the researchers say.

We fully share the concerns and sentiments expressed in the report. In the absence of a federal privacy law, states are left to fend for themselves when it comes to protecting the privacy of their residents, and those efforts are often derailed by opposition from tech giants. While it’s encouraging that some state legislatures are moving forward with stronger privacy protections, there’s still a lot of work to be done.

Uber is fined €10M for privacy violations

Uber, the popular ride-hailing company, has been slapped with a €10M privacy fine for violating the rights of French taxi drivers. The fine stems from a complaint filed by the French drivers’ association. The complaint was handled by the data protection authority of the Netherlands, where Uber’s European office is located.

The Dutch regulator found that Uber failed to make the data about drivers readily available to them, and was not clear about its data retention policies. On top of that, the US company failed to inform the drivers about the security measures it applied when transferring their data outside of the region. “Transparency is a fundamental part of protecting personal data. If you don’t know how your personal data is being handled, you can’t determine whether you are being put at a disadvantage or treated unfairly. And you can’t stand up for your rights,” the Dutch regulator’s chairman said.

It’s not the first time Uber has faced repercussions for its lax privacy practices. Last year, Uber’s ex-CSO Joe Sullivan narrowly escaped prison time after he attempted to cover up a massive data breach. The data breach occurred back in 2016, exposing personal information (including driver’s license numbers) of approximately 600,000 drivers. It seems that Uber has been learning from its mistakes, but still has room for improvement.

AI-addled surveillance lands a city in trouble

An Italian privacy watchdog has fined the city of Trento, accusing it of trampling on privacy laws with the way it used artificial intelligence to monitor city streets. The city of about 117,000 people must now pay a fine of 50,000 euros ($54,225) for collecting people’s personal data as part of several AI-powered projects, including “Marvel” and “Protector.”

In all the projects, the city collected information in public spaces through microphones and surveillance cameras, with the aim of preventing threats to public safety. The Italian privacy watchdog Garante found that the authorities also collected and processed data from social media, such as Twitter and YouTube, for the same purposes. All of this data collection was done without people’s knowledge or clear consent.

According to Reuters, this is the first time in Italy’s history that a municipality has been punished for breaking the law while conducting AI-enabled surveillance. But it’s unlikely to be the last. As AI advances and permeates new industries and fields, we expect that it will be incorporated into more law enforcement activities than ever before. And it’s hard to believe that every actor that breaks privacy laws while using AI for surveillance will be held to account.